We are witnessing a fast, global acceleration in the adoption of machine learning (ML) in actuarial work, to the point that by the time this article is published, new and surprising developments may have appeared on the horizon. ML tools, which seemed like experimental methodologies and techniques a few years ago, are increasingly being embedded in pricing, reserving, underwriting, claims and reporting processes. For a profession grounded in analytical rigor and trusted for its ability to quantify risks under uncertainty, these developments present both significant opportunities and profound challenges.

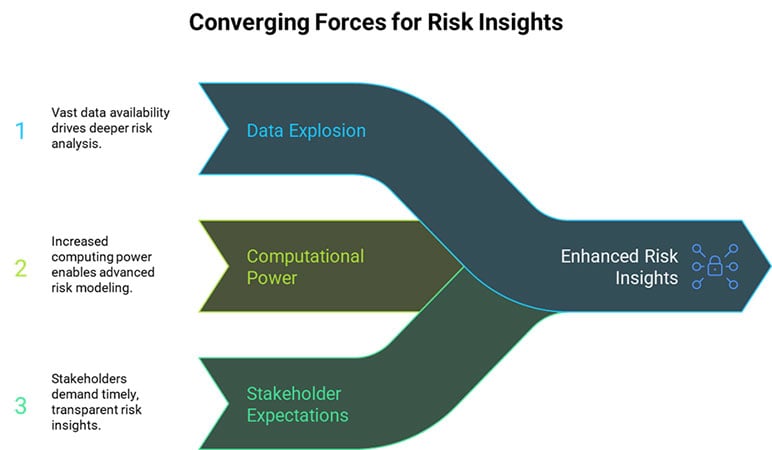

We are indeed witnessing three converging forces, as shown in Figure 1:

- The explosion of available data;

- Substantial increases in computational power; and

- Heightened expectations from regulators and stakeholders for more timely, transparent and sophisticated risk insights.

Figure 1

Converging Forces for Risk Insights

Source: Arocha & Associates GmbH.

In property and casualty (P&C), traditional techniques such as generalized linear models (GLMs) remain the standard, but they are now being complemented, and even challenged, by ML methods that capture nonlinear interactions between covariates.[1] In addition, advanced ML models capable of generating coherent, humanlike content are starting to free actuaries to focus on judgment-driven work that brings higher value to their employers.[2] These models belong to the field of generative artificial intelligence, or GenAI. A few applications of GenAI are:

- Drafting reports and memoranda. Actuaries and other professionals routinely use GenAI to help them write all sorts of content, from emails to reports, either from scratch or by running their drafts through a large language model (LLM) to improve tone and style (and yes, grammar!).

- Improving business communications. It is now easier than ever to create slide decks, graphs, diagrams and all sorts of visualizations that help actuaries convey insights and analyses.

- Automating documentation. Actuaries devote significant time and resources to documenting their models and assumptions. GenAI can draft an initial report by drawing on regulatory guidelines, prior reports, datasets and so on.

- Enhancing modeling. GenAI allows users to test model performance and accuracy in a variety of ways, for instance, by creating synthetic datasets that follow the actuary’s specifications.
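The idea behind testing with synthetic data can be shown without any GenAI at all. The sketch below is a minimal illustration under assumed parameters: it hard-codes a hypothetical "specification" (a Poisson claim count per policy, with a true urban relativity of 1.5), simulates a portfolio from it and then checks whether a simple estimator recovers the known effect. A GenAI tool would typically be asked to produce data like this from a written specification; here a classical simulator stands in for it.

```python
import math
import random


def poisson_sample(lam: float, rng: random.Random) -> int:
    """Draw one Poisson(lam) variate with Knuth's multiplication method (adequate for small lam)."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


# Hypothetical "specification" an actuary might hand to a data generator.
SPEC = {
    "n_policies": 20_000,
    "base_frequency": 0.10,   # expected claims per policy-year for rural risks
    "urban_relativity": 1.5,  # true multiplicative effect of the urban flag
    "urban_share": 0.4,       # proportion of urban policies
}


def simulate_portfolio(spec: dict, seed: int = 2025) -> list:
    """One row per policy (one year of exposure each), drawn from the known true model."""
    rng = random.Random(seed)
    rows = []
    for _ in range(spec["n_policies"]):
        urban = rng.random() < spec["urban_share"]
        lam = spec["base_frequency"] * (spec["urban_relativity"] if urban else 1.0)
        rows.append({"urban": urban, "claims": poisson_sample(lam, rng)})
    return rows


def estimated_relativity(rows: list) -> float:
    """Urban/rural ratio of observed claim frequencies (the Poisson MLE for a single binary factor)."""
    def frequency(flag: bool) -> float:
        counts = [r["claims"] for r in rows if r["urban"] == flag]
        return sum(counts) / len(counts)
    return frequency(True) / frequency(False)


data = simulate_portfolio(SPEC)
print(f"true relativity 1.50, estimated {estimated_relativity(data):.2f}")
```

Because the true relativity is known in advance, any gap between 1.5 and the estimate is pure estimation error; the same harness could be pointed at a more complex model to see whether it recovers effects that are known to be there.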

This article examines how AI and automation are reshaping actuarial work globally. It explores current applications, emerging opportunities, key risks, governance considerations and the evolving skills required to use these tools effectively. Most importantly, it considers how actuaries can lead responsibly in this new environment, ensuring that technology enhances rather than undermines the profession’s core values of trust, integrity and public service.

Current State of Automation in Actuarial Practice

Actuaries have always adapted to advances in data and technology, as illustrated by the innovations in Figure 2. The Arithmeter (left), developed in 1869, sped up the calculation of life insurance reserves; VisiCalc (right), released in 1979, was the first spreadsheet program for personal computers. However, the current wave of automation is advancing at an unprecedented pace, and AI tools are moving from exploratory pilots to integrated components of actuarial work.

Figure 2

Key Milestones in Automation

Sources: Society of Actuaries; Wikipedia.

As already stated, the use of ML techniques in P&C is increasing, perhaps owing to their ability to incorporate more covariates, including telematics data, into pricing. Research shows that ML methods such as gradient boosting machines (GBMs) and feed-forward neural networks (FNNs) can outperform traditional GLMs in predictive accuracy, especially when capturing complex nonlinearities in claims behavior.[3] In reserving, stochastic models augmented by ML are being explored to improve the estimation of outstanding insurance liabilities, although adoption there is more cautious because of regulatory requirements.
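To make "capturing complex nonlinearities" concrete, here is a minimal sketch on made-up cell-level data (every figure is hypothetical). A Poisson GLM with a log link and two categorical factors, main effects only, can be fitted by iterating on marginal totals, because for this model the maximum-likelihood equations reduce to matching observed and fitted claim totals for each factor level. If the true frequencies contain an interaction (here, young drivers are riskier specifically at night), the main-effects model cannot represent it, and the Poisson deviance shows the shortfall. Tree-based methods such as GBMs can find interactions like this without being told where to look; a GLM needs the interaction term specified explicitly.

```python
import math

# Hypothetical cell-level data: (age band, time of day) -> (exposure in policy-years, claim count)
CELLS = {
    ("young", "day"): (1000.0, 80),
    ("young", "night"): (500.0, 90),
    ("old", "day"): (2000.0, 100),
    ("old", "night"): (500.0, 30),
}


def fit_main_effects(cells, n_iter=200):
    """Poisson GLM, log link, main effects only, fitted by iterating on marginal totals."""
    ages = sorted({a for a, _ in cells})
    times = sorted({t for _, t in cells})
    a = {k: 1.0 for k in ages}
    b = {k: 1.0 for k in times}
    for _ in range(n_iter):
        for i in ages:  # match each age band's observed claim total
            claims = sum(cells[(i, j)][1] for j in times)
            expected = sum(cells[(i, j)][0] * b[j] for j in times)
            a[i] = claims / expected
        for j in times:  # match each time-of-day band's observed claim total
            claims = sum(cells[(i, j)][1] for i in ages)
            expected = sum(cells[(i, j)][0] * a[i] for i in ages)
            b[j] = claims / expected
    return {(i, j): cells[(i, j)][0] * a[i] * b[j] for i in ages for j in times}


def poisson_deviance(cells, fitted):
    """Poisson deviance: 2 * sum[y * log(y / mu) - (y - mu)], taking y * log(y / mu) = 0 when y = 0."""
    total = 0.0
    for key, (_, y) in cells.items():
        mu = fitted[key]
        term = y * math.log(y / mu) if y > 0 else 0.0
        total += 2.0 * (term - (y - mu))
    return total


main_effects = fit_main_effects(CELLS)
# Adding an age x time interaction makes this 2x2 table saturated: fitted counts equal observed.
with_interaction = {key: float(y) for key, (_, y) in CELLS.items()}

for key, (exposure, y) in CELLS.items():
    print(key, "observed", y / exposure, "main effects", round(main_effects[key] / exposure, 4))
print("deviance, main effects only:", round(poisson_deviance(CELLS, main_effects), 2))
print("deviance, with interaction: ", round(poisson_deviance(CELLS, with_interaction), 2))
```

Note that the comparison is in-sample, so it illustrates lack of fit rather than out-of-sample predictive accuracy; the research cited above evaluates models on held-out data.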

In underwriting, ML is streamlining processes by incorporating external data sources and by using natural language processing (NLP) and image recognition to assess risks more efficiently.

In claims, a natural application is fraud detection. Triage algorithms are being deployed to flag suspicious activity or to prioritize complex cases for human intervention, which brings operational cost savings.
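At its core, a triage step of this kind is a routing rule applied on top of a model score. The sketch below is hypothetical: the fraud scores are assumed to come from some upstream model (not shown), and the thresholds are illustrative. Claims at or above a score threshold, or above a size limit regardless of score, go to a human investigator, ordered by a simple priority key; all other claims are fast-tracked.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    claim_id: str
    amount: float
    fraud_score: float  # assumed output of an upstream model, in [0, 1]


# Illustrative thresholds; in practice these would be calibrated and reviewed.
REVIEW_SCORE = 0.7
LARGE_CLAIM = 50_000.0


def triage(claims: list) -> tuple:
    """Split claims into a human-review queue (highest priority first) and a fast-track list."""
    review, fast_track = [], []
    for c in claims:
        if c.fraud_score >= REVIEW_SCORE or c.amount >= LARGE_CLAIM:
            review.append(c)
        else:
            fast_track.append(c)
    # Simple priority key: score times amount, so large suspicious claims come first.
    review.sort(key=lambda c: c.fraud_score * c.amount, reverse=True)
    return review, fast_track


claims = [
    Claim("C1", 1_200.0, 0.10),
    Claim("C2", 8_000.0, 0.85),
    Claim("C3", 75_000.0, 0.20),
    Claim("C4", 3_000.0, 0.95),
]
review, fast_track = triage(claims)
print("human review:", [c.claim_id for c in review])
print("fast track:  ", [c.claim_id for c in fast_track])
```

The human-review queue keeps people in the loop for exactly the cases where an automated decision would be most costly or contentious.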

In life and health, the adoption of AI tools has been somewhat slower than in P&C, perhaps due to longer time horizons and sensitivity around fairness and discrimination.

Despite this progress, several obstacles have tempered the widespread adoption of AI, including limited model transparency, the apparent scarcity of actuaries with advanced ML skills, difficult integration with legacy systems and regulatory uncertainty. Even so, AI and automation are already shaping daily practice, with the degree of adoption differing across sectors and geographies.

Opportunities Presented by AI

Far from replacing actuaries, AI technologies create opportunities to expand professional scope, enhance predictive capabilities and deliver greater strategic value to organizations.

Data cleansing (a repetitive, low-value task) has been the main activity of many an intern, and even seasoned actuaries have spent countless hours getting data ready for modeling. Other repetitive tasks, such as report generation and regulatory filings, also consume significant actuarial time. By applying AI to these processes, companies are improving productivity and freeing actuaries to concentrate on high-value activities such as model design and risk interpretation, so that they can act as effective business advisers.
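Not every step requires AI: much of data cleansing consists of mechanical checks that are easy to automate. Here is a minimal sketch of rule-based validation for claims records. The field names, the sample records and the four rules are hypothetical and would need to match an actual data dictionary.

```python
from datetime import date

# Hypothetical claims extract; field names are illustrative.
RECORDS = [
    {"claim_id": "A1", "policy_id": "P1", "loss_date": date(2024, 3, 1),
     "report_date": date(2024, 3, 5), "paid": 1500.0},
    {"claim_id": "A2", "policy_id": None, "loss_date": date(2024, 4, 2),
     "report_date": date(2024, 4, 1), "paid": -20.0},
    {"claim_id": "A1", "policy_id": "P3", "loss_date": date(2024, 5, 9),
     "report_date": date(2024, 5, 10), "paid": 0.0},
]


def validate(records):
    """Return a list of (claim_id, issue) pairs; an empty list means every check passed."""
    issues = []
    seen = set()
    for r in records:
        cid = r["claim_id"]
        if r["policy_id"] is None:
            issues.append((cid, "missing policy_id"))
        if r["paid"] < 0:
            issues.append((cid, "negative paid amount"))
        if r["report_date"] < r["loss_date"]:
            issues.append((cid, "reported before loss date"))
        if cid in seen:
            issues.append((cid, "duplicate claim_id"))
        seen.add(cid)
    return issues


for claim_id, issue in validate(RECORDS):
    print(claim_id, issue)
```

Returning a list of issues rather than silently fixing records keeps the actuary in control of how each exception is resolved.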

In fact, the most transformative opportunity is the expansion of actuaries’ strategic influence. With AI accelerating routine analytics, actuaries can step into advisory roles that connect technical insights to business strategy. This includes supporting boards with forward-looking scenario analyses, with stress tests that incorporate emerging risks such as cyber and climate change, and with capital allocation decisions. By bridging technical modeling with governance and strategic priorities, actuaries position themselves as indispensable advisers in an increasingly complex risk landscape.

The aforementioned opportunities suggest that AI is not a substitute for actuarial expertise but a catalyst for broadening its impact. When leveraged responsibly, AI can amplify the profession’s contribution to efficiency, predictive accuracy and strategic decision-making.

Challenges, Risks and Limitations

The adoption of AI and automation in actuarial practice is not without its complexities. Alongside efficiency gains come significant risks that must be addressed to preserve trust in actuarial outputs. These risks span technical, regulatory, ethical and organizational dimensions.

Let’s start with the black box problem. While models such as GBMs or FNNs often deliver superior predictive accuracy, their complexity can make it difficult for actuaries, regulators or policyholders to understand how outputs are generated. This lack of transparency is at odds with regulatory expectations for clear, explainable models in insurance pricing and reserving. Recent work emphasizes the need for explainability frameworks in ML applications, demonstrating that adoption without interpretability can undermine trust and regulatory compliance.[4]
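One widely used model-agnostic, post hoc explanation technique is permutation feature importance: shuffle one input at a time and measure how much the model's error worsens. The sketch below applies it to a stand-in "black box," a hand-written function assumed here in place of a trained GBM or FNN, and measures error with mean squared error on simulated data. With a real model one would pass its prediction function instead; libraries such as scikit-learn also provide an implementation.

```python
import random


def black_box(x):
    """Stand-in for an opaque model: depends strongly on x[0], weakly on x[1], not at all on x[2]."""
    return 3.0 * x[0] + 0.5 * x[1] ** 2


def mse(model, X, y):
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Average increase in MSE when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = mse(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild the rows with column j replaced by its shuffled values.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


rng = random.Random(42)
X = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(1000)]
y = [black_box(row) + rng.gauss(0, 0.1) for row in X]
importances = permutation_importance(black_box, X, y)
for name, imp in zip(["x0", "x1", "x2"], importances):
    print(f"{name}: {imp:.3f}")
```

The ranking recovers the structure hidden in the function without opening it up, which is precisely what a regulator or policyholder cannot do with a complex model. Permutation importance has known limitations, for example with strongly correlated inputs, so it complements rather than replaces other explainability tools.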

Next, let’s move on to bias and fairness. Historical insurance data may reflect social and economic inequalities, which, if left unchecked, can be perpetuated or even amplified by ML models. At the end of the day, AI systems are only as unbiased as the data used to train them.
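A simple first screen for this risk is to check whether a permitted rating variable may be acting as a proxy for a protected characteristic. The sketch below computes the correlation between each candidate variable and a protected attribute on a small hypothetical dataset (all values are made up). A high absolute correlation does not prove unfairness, but it flags a variable for closer review; real assessments go well beyond pairwise correlation, and the 0.5 threshold is an illustrative assumption.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Hypothetical data: the protected attribute (1 = member of a protected group) is
# excluded from pricing, but the candidate rating variables below are not.
protected = [1, 1, 1, 0, 0, 0, 1, 0]
rating_vars = {
    "postcode_band": [5, 4, 5, 1, 2, 1, 4, 2],
    "vehicle_age": [3, 8, 1, 7, 2, 9, 5, 4],
}
FLAG_THRESHOLD = 0.5  # illustrative cut-off, not a regulatory standard

correlations = {name: pearson(values, protected) for name, values in rating_vars.items()}
flagged = [name for name, r in correlations.items() if abs(r) >= FLAG_THRESHOLD]

for name, r in correlations.items():
    note = "  -> review as a possible proxy" if name in flagged else ""
    print(f"{name}: r = {r:+.2f}{note}")
```

In this toy example the postcode band is almost a stand-in for group membership, so a model using it could reproduce historical inequalities even though the protected attribute itself is never an input.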

Now let’s dive into regulation. Regulators worldwide are sharpening their focus on AI and ML in financial services. For example, the European Union’s AI Act introduces strict requirements related to transparency, accountability and human oversight for high-risk AI applications. Similarly, actuarial associations and standard-setters are publishing draft governance frameworks that emphasize model life-cycle management, validation and ethical oversight.[5]

Finally, there are practical obstacles to AI adoption. Many actuarial teams lack in-house expertise in advanced ML, requiring investment in reskilling and cross-functional collaboration with data scientists. Resistance to change is another well-known barrier: actuaries accustomed to traditional models may be reluctant to embrace less familiar methods. In addition, integration challenges with legacy IT systems slow the transition from pilot projects to enterprise-scale adoption.

AI promises to enhance actuarial practice, but its risks are nontrivial, and the profession’s credibility depends on addressing these limitations with rigor.

On Governance and Ethics

The integration of AI into actuarial practice demands more than technical proficiency. It requires a deliberate focus on governance, ethics and the evolving identity of the actuarial profession. As insurers, pension funds and regulators grapple with the implications of machine-driven decision-making, actuaries are uniquely positioned to shape frameworks that safeguard trust and fairness.

Robust governance is emerging as a nonnegotiable requirement for AI applications. The International Actuarial Association’s (IAA’s) Artificial Intelligence Task Force (AITF) has proposed a governance framework emphasizing the entire model life cycle, from design and data sourcing, through validation and monitoring, to decommissioning.[6] This approach draws on lessons from traditional actuarial control cycles, adapted for the complexities of AI. Central to this governance is the principle of “human-in-the-loop,” which ensures that human judgment and accountability remain paramount even when advanced algorithms are deployed.
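The life-cycle idea and the human-in-the-loop principle can be encoded directly in a model inventory. The sketch below is hypothetical and is not the AITF framework itself: it assumes stage names taken from the life cycle described above, allows only forward transitions (plus a return from monitoring to validation when a model is revised), and refuses to put a model into use, or to retire it, without a named human approver.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical life-cycle stages, loosely following the stages listed above.
ALLOWED_TRANSITIONS = {
    "design": {"data_sourcing"},
    "data_sourcing": {"validation"},
    "validation": {"monitoring"},  # entering monitoring means the model is in use
    "monitoring": {"validation", "decommissioned"},  # revalidate after changes, or retire
    "decommissioned": set(),
}
# Stages that may only be entered with a named human approver (human-in-the-loop).
REQUIRES_SIGN_OFF = {"monitoring", "decommissioned"}


@dataclass
class ModelRecord:
    name: str
    owner: str
    stage: str = "design"
    history: list = field(default_factory=list)  # audit trail of (from, to, approver)

    def advance(self, new_stage: str, approver: Optional[str] = None) -> None:
        if new_stage not in ALLOWED_TRANSITIONS[self.stage]:
            raise ValueError(f"{self.name}: cannot move from {self.stage} to {new_stage}")
        if new_stage in REQUIRES_SIGN_OFF and not approver:
            raise PermissionError(f"{self.name}: entering {new_stage} requires a named approver")
        self.history.append((self.stage, new_stage, approver))
        self.stage = new_stage


model = ModelRecord("motor_frequency_gbm", owner="pricing team")
model.advance("data_sourcing")
model.advance("validation")
try:
    model.advance("monitoring")  # blocked: no human sign-off
except PermissionError as err:
    print(err)
model.advance("monitoring", approver="chief actuary")
print(model.stage, model.history)
```

Because the blocked attempt raises before anything is recorded, the audit trail contains only approved transitions, each tied to an accountable person where sign-off is required.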

AI raises ethical challenges that go beyond accuracy. Issues of fairness, transparency, accountability and data privacy are central to the legitimacy of actuarial models. For example, pricing models that unintentionally penalize vulnerable groups could undermine public trust in insurance. Actuaries, guided by professional codes of conduct, are well-placed to assess not just whether models are statistically valid, but also whether they are socially responsible.

As AI adoption grows, the actuarial profession has an opportunity to lead. By embedding governance and ethics into practice, actuaries can reinforce their credibility with regulators, employers and the public. More importantly, they can help ensure that the deployment of AI in insurance and pension funds advances efficiency and innovation without compromising fairness or trust.

Implications for Skills, Education and the Profession

The growing integration of AI into actuarial practice is reshaping not only workflows but also the competencies required of actuaries. To remain effective and relevant, the profession must evolve its skill base, educational pathways and professional development models.

AI-driven models demand proficiency that extends beyond traditional actuarial science. Actuaries increasingly need a working knowledge of machine learning techniques, coding skills in languages such as Python or R, and an understanding of data engineering and visualization tools. Equally important is literacy in areas such as model explainability, fairness assessments and algorithmic bias—competencies that bridge technical expertise with ethical accountability.

The requirements of actuarial credentialing bodies are beginning to reflect this shift. For example, both the Society of Actuaries (SOA) and the Institute and Faculty of Actuaries (IFoA) have introduced syllabus changes to include data science and predictive analytics modules. Continuing professional development (CPD) offerings are also increasingly focused on data science integration, AI governance and cross-disciplinary collaboration. These changes acknowledge that future actuaries must balance statistical rigor with digital fluency.

Another implication is the growing need for actuaries to work in cross-functional teams with data scientists, compliance officers, IT specialists and business strategists. Rather than competing with data scientists, actuaries are well-positioned to complement them—bringing strengths in risk management, long-term forecasting and regulatory compliance to AI projects.

Finally, as automation takes over routine actuarial tasks, there is a risk that some technical functions may become commoditized. This reinforces the need for actuaries to move up the value chain, focusing more on interpretation, governance and strategic advisory roles. By doing so, the profession can ensure that technology amplifies rather than erodes its relevance.

Recommendations and Best Practices

Harnessing the benefits of AI while mitigating its risks requires deliberate strategies. Actuaries can take several practical steps to embed AI responsibly in actuarial practice.

Adopt Hybrid Modeling Approaches

A pragmatic starting point is to blend AI methods with traditional actuarial techniques. Hybrid approaches leverage the predictive power of machine learning while maintaining the interpretability of GLMs and other established methods. This can ease regulatory acceptance and build trust among stakeholders.
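One way to read "hybrid" is to keep a GLM as the base rate and let a machine learning component supply a bounded multiplicative adjustment, in the spirit of the combined actuarial neural network approach cited in endnote [3]. The sketch below is a simplified, hypothetical version: the GLM relativities are taken as given, the "ML adjustment" is reduced to actual-to-expected ratios by band of a new telematics feature (a stand-in for a trained model), and each adjustment is capped so that the GLM structure stays visible.

```python
# Hypothetical GLM: base frequency times fitted relativities (values are illustrative).
GLM_BASE = 0.08
GLM_RELATIVITIES = {
    "age_band": {"young": 1.6, "adult": 1.0, "senior": 1.2},
    "region": {"urban": 1.3, "rural": 1.0},
}
CAP_LOW, CAP_HIGH = 0.8, 1.25  # illustrative bounds keeping the adjustment modest


def glm_frequency(policy: dict) -> float:
    freq = GLM_BASE
    for factor, table in GLM_RELATIVITIES.items():
        freq *= table[policy[factor]]
    return freq


def fit_adjustment(policies, claims, feature):
    """Capped actual-to-expected ratios by level of a feature the GLM ignores.

    A deliberately simple stand-in for an ML component (e.g., a GBM using the GLM prediction as an offset).
    """
    actual, expected = {}, {}
    for policy, n_claims in zip(policies, claims):
        level = policy[feature]
        actual[level] = actual.get(level, 0) + n_claims
        expected[level] = expected.get(level, 0.0) + glm_frequency(policy)
    return {lvl: min(CAP_HIGH, max(CAP_LOW, actual[lvl] / expected[lvl])) for lvl in actual}


def hybrid_frequency(policy, adjustment, feature):
    """GLM frequency times the capped adjustment; unseen levels fall back to the GLM alone."""
    return glm_frequency(policy) * adjustment.get(policy[feature], 1.0)


# Hypothetical experience: 100 adult rural policies per telematics braking band.
policies = [{"age_band": "adult", "region": "rural", "braking": b}
            for b in ["smooth"] * 100 + ["harsh"] * 100]
claims = [1] * 6 + [0] * 94 + [1] * 12 + [0] * 88

adjustment = fit_adjustment(policies, claims, "braking")
new_policy = {"age_band": "young", "region": "urban", "braking": "harsh"}
print(adjustment)
print(round(glm_frequency(new_policy), 4), round(hybrid_frequency(new_policy, adjustment, "braking"), 4))
```

Every hybrid price can still be decomposed into a GLM rate and a single, bounded factor, which can be shown in a rating table and explained to a regulator; the cap trades some predictive gain for that transparency.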

Implement Governance Frameworks

Governance must be integral to AI adoption. As previously stated, the IAA’s Artificial Intelligence Task Force recommends managing the entire model life cycle, including design, validation, monitoring and eventual decommissioning. Clear lines of accountability, robust documentation and independent oversight are critical to maintaining control and credibility.

Prioritize Explainability and Fairness

Actuaries should ensure that AI systems are explainable and fair. This includes using interpretable models where possible or deploying post hoc explanation tools for more complex algorithms. Regular fairness assessments can help identify and correct biases that may disadvantage vulnerable groups. By integrating these practices, actuaries reinforce the profession’s commitment to equity and public trust.
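A regular fairness assessment can start with something as simple as comparing model outputs with observed experience across groups. On hypothetical data, the sketch below computes each group's ratio of total premium to total observed claims cost, expresses it relative to a reference group and flags ratios outside an illustrative tolerance band. The metric and the band are assumptions for illustration. Which fairness criterion is appropriate, and whether group membership may even be collected, depends on the jurisdiction and line of business.

```python
from collections import defaultdict

# Hypothetical policy-level results: (group, model premium, observed claims cost)
ROWS = [
    ("A", 480, 380), ("A", 520, 420), ("A", 500, 400), ("A", 500, 400),
    ("B", 590, 390), ("B", 610, 410), ("B", 600, 400), ("B", 600, 400),
]
REFERENCE = "A"
TOLERANCE = (0.9, 1.1)  # illustrative acceptable band for the relative ratio


def premium_to_cost_by_group(rows):
    """Total premium divided by total observed claims cost, per group."""
    prem, cost = defaultdict(float), defaultdict(float)
    for group, premium, claims_cost in rows:
        prem[group] += premium
        cost[group] += claims_cost
    return {g: prem[g] / cost[g] for g in prem}


def fairness_report(rows, reference, tolerance):
    """Each group's premium-to-cost ratio relative to the reference group, with an out-of-band flag."""
    ratios = premium_to_cost_by_group(rows)
    report = {}
    for g, r in ratios.items():
        relative = r / ratios[reference]
        report[g] = (round(relative, 3), not (tolerance[0] <= relative <= tolerance[1]))
    return report


for group, (relative, flagged) in fairness_report(ROWS, REFERENCE, TOLERANCE).items():
    print(group, relative, "FLAG" if flagged else "ok")
```

Higher premiums for a group are not in themselves a concern if its costs are higher too; what this check flags is a group paying more per unit of observed cost, which is a prompt for investigation rather than a verdict.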

Invest in Training and Collaboration

Companies should invest in upskilling actuaries with data science and AI competencies, while also encouraging cross-functional collaboration. Partnerships between actuaries, data scientists and compliance experts can ensure that AI projects benefit from diverse expertise and meet regulatory standards.[7]

Engage with Regulators and Professional Bodies

Finally, proactive engagement with regulators and professional associations allows actuaries to influence emerging standards and best practices. Participation in working groups and industry initiatives positions actuaries as both users of AI and leaders shaping its responsible adoption.

Takeaways

AI is no longer speculative: it is rapidly becoming embedded in the actuarial toolkit. From pricing and reserving to reporting and governance, AI offers opportunities to increase efficiency, enhance predictive accuracy and elevate actuaries into more strategic advisory roles. At the same time, these benefits come with meaningful risks: model opacity, potential bias, data limitations and regulatory scrutiny. The actuarial profession’s response will determine whether AI strengthens or undermines its credibility. Actuaries are uniquely placed to lead this transition because of their grounding in risk management, ethics and public interest obligations. By adopting hybrid models, embedding governance, prioritizing explainability and engaging in cross-disciplinary collaboration, actuaries can ensure that AI enhances decision-making without eroding trust.

Perhaps most importantly, the rise of AI is reshaping the identity of the profession itself. No longer defined solely by technical modeling, actuaries are increasingly called on to act as stewards of fairness, interpreters of complex algorithms and advisers on strategic risk. This evolution is both a challenge and an opportunity. If embraced proactively, it positions actuaries not as passive AI users but as leaders guiding its responsible application in global financial security systems.

This article is provided for informational and educational purposes only. Neither the Society of Actuaries nor the respective authors’ employers make any endorsement, representation or guarantee with regard to any content, and disclaim any liability in connection with the use or misuse of any information provided herein. This article should not be construed as professional or financial advice. Statements of fact and opinions expressed herein are those of the individual authors and are not necessarily those of the Society of Actuaries or the respective authors’ employers.

Carlos Arocha, FSA, is founder and managing partner of Arocha & Associates GmbH. Carlos can be reached at ca@ArochaAndAssociates.ch.

Endnotes

[1] See, for example, Christopher Blier-Wong et al., “Machine Learning in P&C Insurance: A Review for Pricing and Reserving,” Risks 9, no. 1 (2021): 4, https://doi.org/10.3390/risks9010004.

[2] Anyone who uses ChatGPT, Claude, Perplexity and so on is already tapping into the realm of GenAI.

[3] See, for example, Carina Clemente, Gracinda R. Guerreiro and Jorge M. Bravo, “Modelling Motor Insurance Claim Frequency and Severity Using Gradient Boosting,” Risks 11, no. 9 (2023): 163, https://doi.org/10.3390/risks11090163, as well as Axel Gustafsson and Jacob Hansén, “Combined Actuarial Neural Networks in Actuarial Rate Making” (degree project in mathematics, KTH Royal Institute of Technology, School of Engineering Sciences, Stockholm, 2021), https://kth.diva-portal.org/smash/get/diva2:1596326/FULLTEXT01.pdf.

[4] See, for example, Kevin Kuo and Daniel Lupton, “Towards Explainability of Machine Learning Models in Insurance Pricing,” Variance 16, no. 1 (2023), https://variancejournal.org/article/68374-towards-explainability-of-machine-learning-models-in-insurance-pricing.

[5] See Jas Johal, “Balancing Act: Managing AI Governance Risks in Financial Services,” A&M, October 29, 2024, https://www.alvarezandmarsal.com/insights/balancing-act-managing-ai-governance-risks-financial-services.

[6] International Actuarial Association Artificial Intelligence Task Force, “Artificial Intelligence Governance Framework: General Actuarial Practice,” International Actuarial Association, 2024, https://actuaries.org/app/uploads/2025/05/AITF2024_G3_Governance_Framework_DRAFT.pdf.

[7] I wholeheartedly recommend perusing Mario V. Wüthrich et al., “AI Tools for Actuaries,” 2025, https://aitoolsforactuaries.org.